Docker Architecture – Introduction

This article offers a comprehensive review of Docker architecture. Docker has significantly reshaped the landscape of software development, changing the way we develop, deploy, and scale applications. Central to its success is the consistent environment it provides: an application packaged with Docker runs the same way in development, testing, and production. In the sections that follow, we look at Docker’s core components and the workflows that connect them, to show what makes Docker architecture so influential in the modern tech ecosystem. By the end, readers should have a solid understanding of Docker architecture and its role in today’s software solutions.

Understanding the Core Components of Docker

Docker uses a client-server architecture. At its core, it comprises several components that work in tandem to provide a seamless containerization experience. Here’s a breakdown:

1. Docker Daemon

The Docker daemon (`dockerd`) runs in the background and manages Docker containers. It’s responsible for building images and for running and monitoring containers. The daemon listens for Docker API requests and can communicate with other Docker daemons to manage services.

2. Docker Client

When you use commands like `docker run` or `docker build`, you’re interacting with the Docker client. It’s the primary user interface to Docker and communicates with the Docker daemon to execute commands.

3. Docker Objects

Docker objects are the entities that Docker uses to assemble an application. These include images, containers, networks, volumes, and services.

4. Images

Think of Docker images as blueprints. They are lightweight, stand-alone packages that contain everything needed to run a piece of software, including the code, runtime, system tools, and libraries.

5. Containers and Services

Containers are instances of Docker images that run in isolation from each other. Services, on the other hand, are a method to scale containers across multiple Docker daemons.

6. Network

Docker’s networking capabilities allow containers to communicate with each other and with external networks. Docker provides several network modes, each tailored to specific use cases.

7. Volumes

Data persistence is crucial in the Docker world, because anything written to a container’s own writable layer is deleted along with the container. Volumes are Docker’s solution for persisting data generated and used by Docker containers.

8. Docker Registry

A Docker registry is where Docker images are stored. Docker Hub, a public registry, is widely used, but there are also private registries to store proprietary images.

Docker Daemon and Docker Client

The Heart and Soul of Docker

Docker’s success in the world of containerization can be attributed to its robust architecture. Two of its most pivotal components are the Docker Daemon and the Docker Client. Let’s dive into the intricacies of these components.

Docker Daemon

The Docker Daemon (`dockerd`) is the backbone of the Docker platform. It’s a persistent process that runs in the background on the host system and manages the entire lifecycle of Docker containers. Its responsibilities go further, though: the daemon also manages other Docker objects, including images, networks, and volumes. Whenever a request is made to create or manage a container, the Docker Daemon carries it out. It builds images from Dockerfiles, runs containers from those images, and keeps each container isolated from the others on the same host. The Docker Daemon listens for Docker API requests, either from the Docker Client or from any other tool that speaks the Docker Engine API. It can also communicate with other Docker Daemons, which enables more complex operations such as orchestrating containers across multiple nodes.

Deciphering the Docker Client

The Docker Client is the user’s gateway to the Docker world. It’s the primary interface through which users interact with Docker, and it’s more versatile than one might initially think.

When you type commands like `docker run` or `docker pull`, it’s the Docker Client that springs into action. It translates these commands into REST API calls and sends them to the Docker Daemon, either over a local socket or over a network connection. Because the two communicate through an API, the client and daemon can reside on different systems, and a single Docker Client can communicate with multiple Docker Daemons. This separation is a large part of what makes Docker practical for managing applications across multiple machines.

Moreover, the Docker Client provides feedback and outputs to the user, ensuring they’re always in the loop about the status of their operations. Whether it’s pulling an image from Docker Hub or monitoring the health of a running container, the Docker Client ensures transparency and control.
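
Under the hood, the client’s job is largely to turn a command into an HTTP request against the daemon. The Python below is a minimal sketch of that idea, not the real Docker CLI: `COMMAND_TO_ENDPOINT`, `build_request`, and `engine_request` are hypothetical names, the table covers only a handful of Docker Engine API endpoints, and the socket path assumes a Linux host using the default `/var/run/docker.sock`. The real client also prefixes paths with an API version (for example `/v1.43`) and sends query parameters and request bodies.

```python
import http.client
import json
import socket

# Hypothetical, partial mapping of CLI commands to Docker Engine API endpoints.
# "{id}" is replaced with a container name or ID.
COMMAND_TO_ENDPOINT = {
    "ps": ("GET", "/containers/json"),
    "start": ("POST", "/containers/{id}/start"),
    "stop": ("POST", "/containers/{id}/stop"),
    "pause": ("POST", "/containers/{id}/pause"),
    "rm": ("DELETE", "/containers/{id}"),
}


def build_request(command, container_id=""):
    """Return the (HTTP method, path) pair a client would send for `command`."""
    method, path = COMMAND_TO_ENDPOINT[command]
    return method, path.format(id=container_id)


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path):
        super().__init__("localhost")  # the host name only fills the Host header
        self.socket_path = socket_path

    def connect(self):
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.connect(self.socket_path)


def engine_request(command, container_id="", socket_path="/var/run/docker.sock"):
    """Send one request to a local daemon; return (status code, decoded JSON or None)."""
    method, path = build_request(command, container_id)
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request(method, path)
        response = conn.getresponse()
        body = response.read()
        return response.status, (json.loads(body) if body else None)
    finally:
        conn.close()
```

On a host where your user is allowed to access the socket, `engine_request("ps")` returns the same list of running containers that `docker ps` renders as a table.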

Demystifying Docker Images

The Building Blocks of Containers

Docker’s rise to prominence in the world of containerization is largely due to its unique approach to packaging software. Central to this approach is the concept of a Docker Image. Let’s delve deeper into understanding this fundamental component.

What is a Docker Image?

At its most basic, a Docker Image is comparable to a blueprint for a software application. It contains all the files, libraries, binaries, and other dependencies the software needs to run. As a result, the application behaves consistently across different environments, which addresses the long-standing problem of “it works on my machine.” An image is also inert and immutable: once created, its content doesn’t change.

Key Characteristics of Docker Images

Understanding the nuances of Docker Images can provide valuable insights into their role in the Docker ecosystem:

- Read-Only Nature: Docker Images are read-only. This means that once an image is created, its content cannot be modified directly. This immutability ensures consistency and reliability when deploying applications.

- Layered Architecture: While direct modifications to an image are not permitted, Docker lets you introduce changes by adding new layers on top of an existing image. Each change results in a new layer, and these layers stack on top of each other to form a new image. This layered approach promotes efficient storage and faster image distribution, because layers that a host or registry already has don’t need to be stored or transferred again.

Parent and Child Images: The Hierarchical Relationship

The layering concept in Docker Images introduces a hierarchical relationship between images. An existing image, often sourced from trusted repositories like Docker Hub, serves as the foundation. This foundation is the parent image (commonly called the base image): the image named in a Dockerfile’s `FROM` instruction, used as the starting point for customized images tailored to specific needs.

When additional layers are added to the base image, a new image is created. This new image, derived from the parent, is referred to as a child image. The child image inherits all the properties and contents of the parent but also includes the new modifications. This parent-child relationship allows for efficient image creation, as child images can leverage the components of their parent, reducing redundancy and ensuring consistency.
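
The parent-child relationship can be modeled in a few lines of Python. This is a simplified in-memory sketch, not how Docker stores layers on disk: `Layer` and `Image` are hypothetical classes, a layer is just a mapping of file paths to contents, and the digest only loosely imitates Docker’s content-addressed `sha256:` layer IDs. It shows that a child image reuses its parent’s layer objects and adds one of its own, and that reads resolve from the top layer down.

```python
import hashlib
import json


class Layer:
    """A read-only filesystem layer: the files it adds or changes (path -> content)."""

    def __init__(self, files):
        self._files = dict(files)  # private copy, never modified afterwards
        payload = json.dumps(self._files, sort_keys=True).encode()
        # Content-addressed ID, loosely imitating Docker's "sha256:..." layer digests.
        self.digest = "sha256:" + hashlib.sha256(payload).hexdigest()

    def get(self, path):
        return self._files.get(path)

    def paths(self):
        return list(self._files)


class Image:
    """An image is an ordered stack of layers, from the bottom (parent) up."""

    def __init__(self, layers):
        self.layers = tuple(layers)

    def child(self, new_files):
        """Derive a child image: reuse every parent layer and add one on top."""
        return Image(self.layers + (Layer(new_files),))

    def read(self, path):
        """Resolve a path as a union filesystem would: the topmost layer wins."""
        for layer in reversed(self.layers):
            content = layer.get(path)
            if content is not None:
                return content
        raise FileNotFoundError(path)

    def filesystem(self):
        """Merged view of all layers; upper layers override lower ones."""
        merged = {}
        for layer in self.layers:
            for path in layer.paths():
                merged[path] = layer.get(path)
        return merged
```

Because `child` builds a new tuple holding the same `Layer` objects, parent and child share everything below the new top layer, which is the storage and distribution benefit described above.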

Service and Container

Bringing Applications to Life

In the realm of Docker, the terms “service” and “container” often come up. While they are closely related, they serve distinct roles in the Docker ecosystem. Let’s explore these concepts in detail.

Understanding Docker Containers

At its core, a Docker Container is a running instance of a Docker Image. Think of it as the practical application of the blueprint provided by the image. When you want to execute the software packaged inside a Docker Image, you “run” the image, and this action spawns a container.

Here are some key points to remember about Docker Containers:

- Runtime Instances: Containers are the running instances of Docker Images. They encapsulate the application, its environment, libraries, and dependencies, ensuring that the software runs consistently across different setups.

- Multiplicity: One of Docker’s strengths is its ability to create multiple containers from a single image. You can run several instances of the same application concurrently, each in its own isolated environment. This is especially useful for scaling applications, load balancing, and ensuring high availability.

Services

Orchestrating Containers

While a Docker Container is a single instance of an application, a Docker Service is a higher-level concept, especially relevant in the context of Docker Swarm, Docker’s native clustering and orchestration tool.

A Docker Service allows you to define the desired state of a group of containers. For instance, you might specify that a particular application should always have five containers running. If one container fails, the service ensures another one is spun up, maintaining the desired state.
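
Maintaining a desired state is essentially a reconciliation loop: compare what should be running with what is running, then start or stop containers to close the gap. The Python below is a hypothetical, single-process sketch of that loop (the `Service` class, task naming, and task states are invented for illustration); Docker Swarm’s real scheduler also places tasks across nodes and tracks many more task states.

```python
from itertools import count


class Service:
    """Hypothetical, single-process model of a service with a desired replica count."""

    def __init__(self, name, image, replicas):
        self.name = name
        self.image = image
        self.replicas = replicas    # desired state
        self.tasks = {}             # observed state: task id -> "running" | "failed"
        self._next_id = count(1)

    def running(self):
        return [t for t, state in self.tasks.items() if state == "running"]

    def reconcile(self):
        """Nudge observed state toward desired state; return the actions taken."""
        actions = []
        # Drop failed tasks so they no longer count toward the service.
        for task_id in [t for t, state in self.tasks.items() if state == "failed"]:
            del self.tasks[task_id]
            actions.append(f"remove {task_id}")
        running = self.running()
        # Too few running tasks: start replacements from the service's image.
        for _ in range(self.replicas - len(running)):
            task_id = f"{self.name}.{next(self._next_id)}"
            self.tasks[task_id] = "running"
            actions.append(f"start {task_id} from {self.image}")
        # Too many (for example after scaling down): stop the extras.
        for task_id in running[self.replicas:]:
            del self.tasks[task_id]
            actions.append(f"stop {task_id}")
        return actions
```

Calling `reconcile()` again once the observed state matches the desired state returns an empty list, so the loop can safely run on a timer or in response to events.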

Here’s what you need to know about Docker Services:

- Scaling and Load Balancing: Docker Services simplify the process of scaling applications. By defining the number of container replicas in a service, Docker can automatically load balance the traffic across them, ensuring optimal performance.

- Service Discovery: Each Docker Service gets a DNS name, so containers attached to the same network can find and communicate with the service by name, facilitating more complex application architectures.

- Rolling Updates: Docker Services support rolling updates, allowing you to deploy new versions of your application without downtime.
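
A rolling update can be pictured as replacing replicas in small batches. The generator below is a hypothetical sketch of that sequencing only (no health checks, delays, or rollback); its `parallelism` argument plays roughly the role of the `--update-parallelism` option of `docker service update`, which switches a service to a new image via `--image`.

```python
def rolling_update(tasks, new_image, parallelism=1):
    """Yield a snapshot of the replicas after each batch of a rolling update.

    `tasks` lists the image each replica currently runs. Each batch of
    `parallelism` replicas is switched to `new_image` while the rest keep
    running the old version and serving traffic.
    """
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    tasks = list(tasks)  # don't mutate the caller's list
    for start in range(0, len(tasks), parallelism):
        for i in range(start, min(start + parallelism, len(tasks))):
            tasks[i] = new_image  # replace the old task with one on the new image
        yield list(tasks)
```

With three replicas and `parallelism=1`, the snapshots show one, then two, then all three replicas on the new image, so at most one replica is being replaced at any moment.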

In essence, while Docker Containers are the workhorses that run your applications, Docker Services provide the orchestration tools to manage, scale, and maintain these containers, especially in clustered environments.

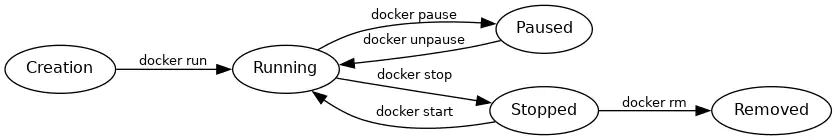

Docker Container Lifecycle

A Visual Guide

Docker Containers, the heart of the Docker ecosystem, have a specific lifecycle that they follow. This lifecycle dictates how containers are created, managed, and eventually terminated. Understanding this lifecycle is crucial for effectively managing and orchestrating Docker containers.

The Docker Container Lifecycle Explained

- Creation: This is the initial stage, where a container is instantiated from a Docker image (for example with `docker create`) but hasn’t started running yet.

- Running: The container enters the running state when it is started with `docker start`; the `docker run` command performs creation and start in a single step. In this state, the application inside the container is active and operational.

- Paused: A running container can be temporarily paused using the `docker pause` command. In this state, the container’s processes are suspended, but the container keeps its state in memory and can be resumed with `docker unpause`.

- Stopped: A container can be stopped using the `docker stop` command. In this state, the container is not running, but its configuration and filesystem changes remain intact. It can be restarted using the `docker start` command.

- Removed: Once a container is no longer needed, it can be removed from the system using the `docker rm` command. This deletes the container along with its writable layer and frees up system resources. A running container must be stopped first, or removed with `docker rm -f`.
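
The lifecycle above behaves like a small state machine. The Python sketch below encodes the transitions described in this section; the `Container` class, `TRANSITIONS` table, and `run` helper are hypothetical, and the model deliberately omits `docker rm -f`, restart policies, and the `restarting` and `dead` states. It uses this article’s state names; `docker ps -a` reports a stopped container’s status as `exited`.

```python
# Simplified container lifecycle: (command, current state) -> next state.
TRANSITIONS = {
    ("start", "created"): "running",
    ("start", "stopped"): "running",
    ("pause", "running"): "paused",
    ("unpause", "paused"): "running",
    ("stop", "running"): "stopped",
    ("rm", "created"): "removed",
    ("rm", "stopped"): "removed",
}


class Container:
    """Hypothetical model that tracks a single container's lifecycle state."""

    def __init__(self, image):
        self.image = image
        self.state = "created"  # what `docker create` leaves behind

    def apply(self, command):
        """Apply a lifecycle command, rejecting transitions the table doesn't allow."""
        key = (command, self.state)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {command} a {self.state} container")
        self.state = TRANSITIONS[key]
        return self.state


def run(image):
    """Model `docker run` as create followed by start."""
    container = Container(image)
    container.apply("start")
    return container
```

Rejecting `rm` on a running container mirrors the rule above that a running container must be stopped before it is removed.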

Docker Networking: Connecting Containers

Docker’s ability to isolate applications in containers is revolutionary, but the real magic happens when these containers communicate with each other and the outside world. This is where Docker Networking comes into play. Let’s explore the intricacies of networking.

The Essence of Docker Networking

Networking in Docker is not just an afterthought; it’s a fundamental component. In real-world scenarios, especially at scale, applications often need to interact with other applications, databases, or external services. Docker Networking facilitates these interactions, ensuring data flow across containers and between containers and the host.

A single host can run many containers, a 1:N relationship. Even though each container operates in isolation, containers can still communicate through the Docker networks they are attached to.

Modes of Networking in Docker

1. Bridge Mode Networking: This is the default networking mode. It creates a private internal network on the host system and connects containers to it. Containers on this network can communicate with each other and with the host through a bridge interface. This mode provides a layer of isolation and security, but might introduce a slight overhead due to the bridging process.

2. Host Mode Networking: In this mode, the container shares the host’s network namespace. The container uses the host’s network stack directly, bypassing Docker’s internal networking. It’s faster since there’s no bridging overhead, but it sacrifices the isolation that the other networking modes provide.

3. Container Mode Networking: This mode allows a container to share the network namespace of another container. The two containers share the same IP address, port space, and other network resources. It’s useful for containers that need to work closely together, like a web server and its caching layer.

4. No Networking: As the name suggests, containers started in this mode are isolated from all networks; they get only a loopback interface. They can’t communicate with the outside world, other containers, or even the host over the network. This mode is useful for containers that need maximum isolation, such as certain types of security testing or sandboxing environments.
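
A small Python helper can show how each of these modes is selected on the command line. It only builds argument lists and never contacts the daemon; on a machine with Docker installed you could pass the result to `subprocess.run`. The helper name `run_command` is hypothetical, while `bridge`, `host`, `none`, and `container:<name-or-id>` are the `--network` values Docker uses for these four modes (the flag also accepts the name of a user-defined network, which this sketch rejects).

```python
def run_command(image, network="bridge", name=None):
    """Build a detached `docker run` argument list using one of the four modes."""
    allowed = {"bridge", "host", "none"}
    if network not in allowed and not network.startswith("container:"):
        raise ValueError(f"unsupported network mode: {network}")
    argv = ["docker", "run", "-d", "--network", network]
    if name:
        argv += ["--name", name]
    argv.append(image)
    return argv


# Container mode: a cache that shares the web server's network namespace.
web = run_command("nginx", name="web")
cache = run_command("redis", network="container:web", name="cache")
```

In the container-mode pair, the `cache` container can reach the web server on `localhost`, because both share one IP address and port space.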

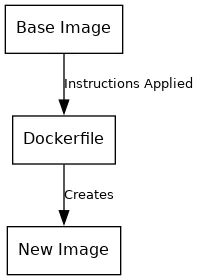

Dockerfile

Crafting Custom Docker Images

The Docker ecosystem is vast, but at its core lies the concept of images. Docker builds these images by following a set of instructions, and a Dockerfile supplies those instructions.

What is a Dockerfile?

A Dockerfile is a plain-text file containing a carefully ordered set of instructions that tell Docker how to build an image. These instructions range from specifying the base image, adding files, and setting environment variables, to defining the default executable for the container.

- Base Image: This is the foundation upon which the new Docker image is built. It could be an official OS image like Ubuntu or an application like Nginx.

- Dockerfile: Contains a series of commands and instructions that are applied to the base image.

- New Image: The result of applying the Dockerfile instructions to the base image, ready to be run as a container.
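
To connect these three pieces, here is a short example Dockerfile embedded in a Python sketch that separates the base image from the instructions applied on top of it. The Dockerfile contents (`python:3.12-slim`, `app.py`, `APP_ENV`) are illustrative assumptions, and the parser is deliberately minimal: it understands comments, blank lines, and backslash continuations, not the full Dockerfile syntax (parser directives, heredocs, or multi-stage builds). `LAYER_INSTRUCTIONS` reflects that `RUN`, `COPY`, and `ADD` create filesystem layers, while instructions such as `ENV` and `CMD` only record image metadata.

```python
DOCKERFILE = """\
# Base image
FROM python:3.12-slim

# Files and environment
COPY app.py /app/app.py
ENV APP_ENV=production

# Default executable
CMD ["python", "/app/app.py"]
"""

# Instructions that add a filesystem layer; the rest only change metadata.
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}


def parse_dockerfile(text):
    """Split a Dockerfile into (INSTRUCTION, arguments) pairs.

    Only handles comments, blank lines, and backslash line continuations.
    """
    steps, pending = [], ""
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.endswith("\\"):
            pending += line[:-1].strip() + " "  # join with the next line
            continue
        instruction, _, args = (pending + line).partition(" ")
        pending = ""
        steps.append((instruction.upper(), args.strip()))
    return steps


def summarize(text):
    """Return the base image and each applied instruction, flagged if it adds a layer."""
    steps = parse_dockerfile(text)
    base = next(args for instr, args in steps if instr == "FROM")
    applied = [(instr, instr in LAYER_INSTRUCTIONS) for instr, _ in steps if instr != "FROM"]
    return base, applied
```

For the example above, `summarize` reports `python:3.12-slim` as the base image and shows that only the `COPY` step adds a new layer to it.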

Docker Registry and Docker Hub

Central Repositories for Docker Images

A Docker Registry is a storage and distribution system for Docker images; within a registry, images are grouped into repositories. It acts as a central location where developers can push their own custom images and pull official or third-party images.

Key points about Docker Registry:

- Accessibility: Docker Registries can be public, allowing anyone to access and pull images, or private, restricting access to specific users or teams.

- Push and Pull: Using the `docker push` command, developers can upload their custom images to a registry. Conversely, the `docker pull` command allows users to download images from a registry.

- Hosting Options: There are various platforms where a Docker Registry can be hosted. Some of the popular ones include:

  - Docker Hub: The official Docker image registry.

  - Amazon Elastic Container Registry (ECR): Amazon Web Services’ managed container registry.

  - Google Container Registry: Google Cloud’s private container storage, now superseded by Artifact Registry.

Docker Hub: The Official Docker Repository

Docker Hub holds a special place in the Docker ecosystem: it is Docker’s own public registry and the default one the Docker client uses when an image name doesn’t include a registry host.

Here’s what you need to know about Docker Hub:

- Vast Collection: Docker Hub boasts a massive collection of both official images from major software vendors and community-contributed images.

- Easy Access: Docker Hub’s registry can be accessed at `index.docker.io`. Pulling an image is straightforward. For instance, to pull the `helloworld` image from the `thedockerbook` namespace, you’d use the command: `docker pull thedockerbook/helloworld`.
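
When you run `docker pull thedockerbook/helloworld`, the client first expands the short name into a fully qualified reference. The sketch below approximates those defaulting rules in Python: a name without a registry host goes to Docker Hub (written as `docker.io` in fully qualified names), single-component names such as `ubuntu` are official images under `library/`, and a missing tag means `latest`. `parse_reference` is a hypothetical helper and skips the character validation that Docker’s own reference parser performs.

```python
DEFAULT_REGISTRY = "docker.io"  # how Docker Hub appears in fully qualified image names


def parse_reference(ref):
    """Split an image reference into (registry, repository, tag, digest).

    Simplified: follows Docker's defaulting rules but does no character validation.
    """
    name, _, digest = ref.partition("@")
    registry = DEFAULT_REGISTRY
    first, sep, rest = name.partition("/")
    # The first component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, name = first, rest
    tag = None
    if name.rfind(":") > name.rfind("/"):  # a ':' after the last '/' starts the tag
        name, _, tag = name.rpartition(":")
    if tag is None and not digest:
        tag = "latest"  # no tag and no digest: Docker assumes "latest"
    if registry == DEFAULT_REGISTRY and "/" not in name:
        name = "library/" + name  # single-name Docker Hub images are official images
    return registry, name, tag, digest or None
```

These rules are why `docker pull ubuntu` reports the image it fetched as `docker.io/library/ubuntu:latest`.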

Docker Storage

Managing Data in Containers

Docker’s ability to package and run applications in isolated environments is revolutionary. However, managing data within these containers is equally crucial. Docker Storage manages data efficiently both inside containers and between them.

Storage Drivers: The Backbone of Docker Storage

Docker employs storage drivers to manage the data of containers and images. These drivers handle the details of data storage, ensuring efficient use of system resources.

Key points about storage drivers:

- Container Layer: Each container has a thin writable layer of its own, unlike the image layers beneath it, which are strictly read-only. This layer records every change made inside the container.

- Shared Image Layers: While each container has its own writable layer, containers based on the same image all share that image’s read-only layers. This makes efficient use of storage, as multiple containers can leverage the same base data.

- Copy-on-Write Mechanism: Central to Docker’s storage efficiency is the copy-on-write mechanism. All containers read from one shared copy of the image data; only when a container modifies a file is that file copied into the container’s writable layer and changed there.
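
These three properties can be simulated in Python. This is an in-memory sketch, not a storage driver (real drivers such as overlay2 work at the filesystem level), and the `ContainerFS` class and `WHITEOUT` marker are hypothetical. It shows two containers sharing the same read-only image layer, a modification being copied into one container’s writable layer, and a deletion being recorded as a “whiteout” entry that hides the file without touching the image.

```python
WHITEOUT = object()  # marker for a file deleted in the container layer


class ContainerFS:
    """Copy-on-write view: shared read-only image layers plus a private writable layer."""

    def __init__(self, image_layers):
        self.image_layers = image_layers  # list of dicts, bottom to top; never modified
        self.writable = {}                # this container's thin writable layer

    def read(self, path):
        if path in self.writable:
            value = self.writable[path]
            if value is WHITEOUT:
                raise FileNotFoundError(path)
            return value
        for layer in reversed(self.image_layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, content):
        # New or fully overwritten files live only in the writable layer.
        self.writable[path] = content

    def append(self, path, extra):
        # Copy-on-write: read the current version (possibly from a shared image
        # layer), then store the modified copy in this container's writable layer.
        self.writable[path] = self.read(path) + extra

    def delete(self, path):
        self.read(path)  # raises FileNotFoundError if the file doesn't exist
        self.writable[path] = WHITEOUT
```

Only the container that changes a file pays for a copy of it; every other container keeps reading the single shared version.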

Deep Dive into Storage Mechanisms

- Copy-on-Write Mechanism: Docker’s engine is designed for efficiency. When starting a container from an image, it doesn’t duplicate the entire image. Instead, it employs the copy-on-write strategy: containers use a shared set of data until a modification occurs within a container. This approach not only conserves disk space but also speeds up container start-up.

- Volumes: Volumes are a fundamental part of Docker’s approach to handling data. A volume is a directory managed by Docker on the host and mounted into a container; because it lives outside the container’s writable layer, its data persists after the container is removed. Volumes are created and managed by Docker, and they support both data sharing and persistence. They can also be mounted into many containers at once, ensuring consistent data and easy access. For example, when two containers require access to the same dataset, both can mount the same volume.
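
As a sketch of the shared-dataset case, the Python helpers below build `docker run` argument lists that mount the same named volume into two containers, one of them read-only. The helper names, image names, container names, and the volume name `shared-data` are placeholders; the `--mount type=volume,source=...,target=...` syntax and its `readonly` option are Docker’s. If the named volume doesn’t exist yet, Docker creates it on first use, and removing either container with `docker rm` leaves the volume and its data in place.

```python
def mount_args(volume, target, readonly=False):
    """Build the `--mount` option that attaches a named volume to a container."""
    spec = f"type=volume,source={volume},target={target}"
    if readonly:
        spec += ",readonly"
    return ["--mount", spec]


def run_with_volume(image, name, volume, target, readonly=False):
    """Build a detached `docker run` argument list for a container that mounts `volume`."""
    return ["docker", "run", "-d", "--name", name,
            *mount_args(volume, target, readonly), image]


# Two containers sharing one dataset: a writer and a read-only reader.
writer = run_with_volume("my-writer-image", "writer", "shared-data", "/data")
reader = run_with_volume("my-reader-image", "reader", "shared-data", "/data", readonly=True)
```

Mounting the reader read-only is a simple way to keep a single container responsible for changes to the shared data.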

Wrapping Up Our Discussion of Docker Architecture

Docker’s architecture has had a transformative impact on the software world. It offers a consistent and efficient way to develop, deploy, and run applications, and in doing so it addresses many challenges developers previously faced.

The strength of Docker’s architecture lies in its simplicity and efficiency. Because Docker uses a container-based approach, applications run the same way across varied environments. This uniformity lets developers, testers, and operators work from the same artifact, which reduces the chance of environment-specific errors.

Docker’s architecture also promotes scalability. As businesses grow and adapt, their software requirements shift, and Docker makes it much easier to scale applications up or down in response. This adaptability is especially valuable for organizations planning rapid growth.

The community around Docker is also vibrant and supportive. Its inclusiveness helps newcomers get up to speed with the basics quickly, while seasoned experts keep refining their skills. This cooperative spirit helps keep Docker at the forefront of the technology landscape.

In conclusion, Docker’s architecture is more than just a tool or a process; it represents a different way of approaching software. By adopting Docker’s principles, we set the stage for more reliable and adaptable software solutions. Looking ahead, Docker’s architecture is likely to keep playing a central role in shaping the technology landscape.